Sound Synthesis in Swift: A Core Audio Tone Generator


The other day, Morgan at Swift London mentioned that my Swift node-based user interface might make an interesting basis for an audio synthesiser. Always ready for a challenge, I spent a little time looking into Core Audio to see how this could be done and created a small demonstration app: a multichannel tone generator.

I've done something similar for Flash in the past. In that project, I had to manually create 8192 samples to synthesise a tone and ended up using ActionScript Workers to do that work in the background. After some poking around, it seems a similar approach can be taken in Core Audio, but for pure sine waves there's a far simpler route: Audio Toolbox.
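For comparison, synthesising a tone by hand (the approach I used in Flash) means filling a buffer with sample values yourself. Here is a minimal sketch in Swift, assuming a mono buffer of `Float` samples in the range -1...1 and a 44.1 kHz sample rate; `makeSineSamples` and its defaults are illustrative names, not part of Core Audio or of this project:

```swift
import Foundation

/// Fills a buffer with a pure sine tone (illustrative helper, not a Core Audio API).
/// - frequency: tone frequency in Hz
/// - amplitude: peak amplitude in 0...1
/// - sampleRate: samples per second (44,100 assumed)
/// - count: number of samples (8192, matching the buffer size mentioned above)
func makeSineSamples(frequency: Double,
                     amplitude: Double = 0.5,
                     sampleRate: Double = 44_100,
                     count: Int = 8192) -> [Float] {
    // The phase advances by 2πf / sampleRate radians per sample
    let phaseIncrement = 2.0 * Double.pi * frequency / sampleRate
    return (0..<count).map { n in
        Float(amplitude * sin(phaseIncrement * Double(n)))
    }
}

let samples = makeSineSamples(frequency: 440)
print(samples.count)  // 8192
```

If consecutive buffers are generated, the phase needs to carry over from one buffer to the next; restarting at zero each time can produce an audible click at every boundary unless the buffer happens to hold a whole number of cycles.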

Luckily, I found an excellent article by Gene De Lisa. His SwiftSimpleGraph project contains all the boilerplate code needed to create sine waves, so all I had to do was add a user interface.

My project contains four ToneWidget instances, each of which contains numeric dials for frequency and velocity and a sine wave renderer. There's also an additional sine wave renderer that displays the composite wave of all four channels.

The sine wave renderer creates a bitmap image of the wave, based on some code Joseph Lord tweaked in my Swift reaction diffusion project over the summer. It exposes a setFrequencyVelocityPairs() method, which accepts an array of FrequencyVelocityPair instances. When that's invoked, the drawSineWave() method steps through each column of the bitmap and loops over the array, calculating and summing a vertical offset for every pair:

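```swift
for i in 1 ..< Int(frame.width)
{
    let scale = M_PI * 5
    let curveX = Double(i)

    // Every column starts at the vertical midpoint of the bitmap
    var curveY = Double(frame.height / 2)

    for pair in frequencyVelocityPairs
    {
        // Normalise the dial values (0...127) to 0...1
        let frequency = Double(pair.frequency) / 127.0
        let velocity = Double(pair.velocity) / 127.0

        // Add this channel's contribution: frequency sets the period, velocity the amplitude
        curveY += ((sin(curveX / scale * frequency * 5)) * (velocity * 10))
    }

    [...]
```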
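To see what the column loop computes in isolation, it can be pulled out into a standalone function. This is a sketch, not the project's code: the `FrequencyVelocityPair` struct here is a hypothetical stand-in with `Int` fields in the 0...127 range, `curveYValues` is an illustrative name, and unlike the original loop (which starts at column 1 so every column has a predecessor to join to) it computes every column:

```swift
import Foundation

/// Hypothetical stand-in for the project's FrequencyVelocityPair (values assumed 0...127).
struct FrequencyVelocityPair {
    var frequency: Int
    var velocity: Int
}

/// Returns one vertical position per bitmap column, summing a sine
/// contribution from every pair on top of the vertical midpoint.
func curveYValues(width: Int, height: Int, pairs: [FrequencyVelocityPair]) -> [Double] {
    let scale = Double.pi * 5          // same horizontal scale as the loop above
    let midpoint = Double(height) / 2
    return (0..<width).map { column in
        let curveX = Double(column)
        var curveY = midpoint
        for pair in pairs {
            let frequency = Double(pair.frequency) / 127.0
            let velocity = Double(pair.velocity) / 127.0
            // Full velocity moves the curve up to 10 pixels either side of the midpoint
            curveY += sin(curveX / scale * frequency * 5) * (velocity * 10)
        }
        return curveY
    }
}
```

Because each channel's contribution is simply added on, passing all four pairs yields the sum of the four single-channel offsets, which is why one renderer can serve both an individual channel and the composite.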

Then, rather than simply plotting a single point for each column, it draws a vertical line between the position just calculated for the current column and the position calculated for the previous column, so steep sections of the wave don't break up into isolated dots:

```swift
// Fill every pixel in this column between the previous and current vertical positions
for yy in Int(min(previousCurveY, curveY)) ... Int(max(previousCurveY, curveY))
{
    // Row-major index into the bitmap's pixel buffer
    let pixelIndex : Int = (yy * Int(frame.width) + i)

    pixelArray[pixelIndex].r = UInt8(255 * colorRef[0])
    pixelArray[pixelIndex].g = UInt8(255 * colorRef[1])
    pixelArray[pixelIndex].b = UInt8(255 * colorRef[2])
}
```
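The same span-filling step can be written as a self-contained sketch. `PixelData` and `drawSpan` are hypothetical names (the original writes into `pixelArray` using components from `colorRef`), and this version also clamps the span to the bitmap: with four channels at full velocity the curve can swing 40 pixels either side of the midpoint, so a shorter bitmap would otherwise be indexed out of bounds.

```swift
/// Hypothetical RGBA pixel type with the r/g/b fields used above.
struct PixelData {
    var a: UInt8 = 255
    var r: UInt8 = 0
    var g: UInt8 = 0
    var b: UInt8 = 0
}

/// Colours every pixel in `column` between the previous and current y positions,
/// so consecutive columns are joined by a continuous line.
func drawSpan(in pixels: inout [PixelData], width: Int, height: Int,
              column: Int, previousY: Double, currentY: Double,
              color: (r: UInt8, g: UInt8, b: UInt8)) {
    // Clamp the span to the bitmap's rows
    let top = max(0, Int(min(previousY, currentY)))
    let bottom = min(height - 1, Int(max(previousY, currentY)))
    guard top <= bottom, (0..<width).contains(column) else { return }

    for y in top...bottom {
        let index = y * width + column   // row-major: row offset plus column
        pixels[index].r = color.r
        pixels[index].g = color.g
        pixels[index].b = color.b
    }
}
```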

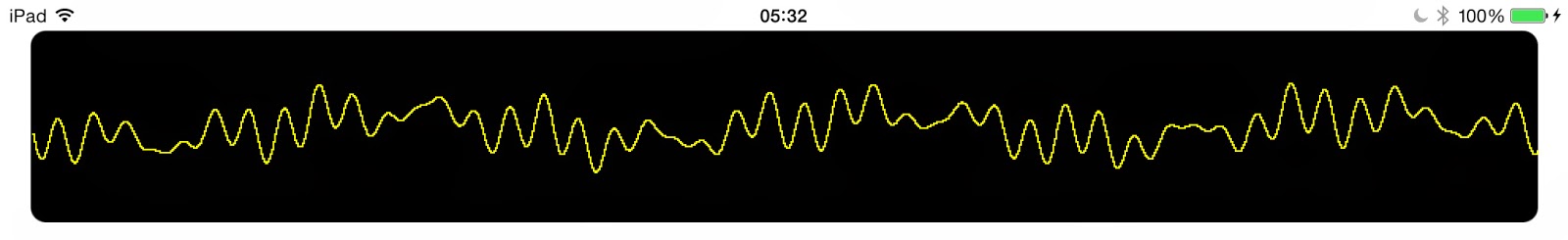

The result is a continuous wave:

Because the sine wave renderer accepts an array of values, I'm able to use the same component for both the individual channels (where the array length is one) and for the composite of all four (where the array length is four).

I was ready to use GCD to do the sine wave drawing on a background thread, but both the simulator and my old iPad handle it happily on the main thread, and the user interface stays completely responsive.
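For the record, offloading the drawing would only take a few lines. This is a minimal sketch using the `DispatchQueue` API available from Swift 3 onwards; `renderInBackground` is a hypothetical helper, and `deliveryQueue` is a parameter only so the sketch can run outside an app. In the view controller it would be `DispatchQueue.main`, because UIKit views should only be updated on the main thread:

```swift
import Dispatch

/// Runs `render` on a global background queue, then delivers its output on `deliveryQueue`.
func renderInBackground<Output>(
    deliveryQueue: DispatchQueue,
    render: @escaping () -> Output,
    completion: @escaping (Output) -> Void
) {
    DispatchQueue.global(qos: .userInitiated).async {
        // The expensive work (e.g. building the sine wave bitmap) runs off the calling thread
        let output = render()
        deliveryQueue.async {
            // Hand the finished result back, e.g. to assign an image to a view
            completion(output)
        }
    }
}
```

One thing a background version would have to handle is rapid dial changes: renders started in quick succession on a concurrent queue can finish out of order, so an older wave could overwrite a newer one.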

Up in the view controller, I have one array containing the widgets and another containing the currently playing notes; both are populated inside viewDidLoad():

```swift
override func viewDidLoad()
{
    super.viewDidLoad()

    view.addSubview(sineWaveRenderer)

    for i in 0 ... 3
    {
        let toneWidget = ToneWidget(index: i, frame: CGRectZero)
        toneWidget.addTarget(self, action: "toneWidgetChangeHandler:", forControlEvents: UIControlEvents.ValueChanged)

        toneWidgets.append(toneWidget)
        view.addSubview(toneWidget)

        // Remember the starting note so it can be switched off later, then start it playing
        let pair = toneWidget.getFrequencyVelocityPair()
        currentNotes.append(pair)
        soundGenerator.playNoteOn(UInt32(pair.frequency), velocity: UInt32(pair.velocity), channelNumber: UInt32(toneWidget.getIndex()))
    }
}
```
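The dial values are passed straight to playNoteOn(), and the renderer divides them by 127, which suggests they are MIDI-style note numbers and velocities (0 to 127) rather than frequencies in hertz. That is an inference, not something stated above. If it holds, the pitch you hear follows the standard equal-tempered MIDI mapping, where note 69 is A4 at 440 Hz; `frequencyInHertz` is an illustrative helper, not part of the project:

```swift
import Foundation

/// Converts a MIDI note number (0...127) to its equal-tempered frequency in Hz,
/// using the convention that note 69 is A4 = 440 Hz.
func frequencyInHertz(midiNote: Int, concertA: Double = 440.0) -> Double {
    // Each semitone multiplies the frequency by 2^(1/12)
    return concertA * pow(2.0, Double(midiNote - 69) / 12.0)
}

print(frequencyInHertz(midiNote: 69))  // 440.0
print(frequencyInHertz(midiNote: 81))  // 880.0, one octave higher
```

Middle C (note 60) comes out at approximately 261.63 Hz.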

When the user changes the frequency or velocity in any of those widgets, I use the value stored in the currentNotes array to switch off the note currently playing on that channel, update the master sine wave renderer and then play the new note:

```swift
func toneWidgetChangeHandler(toneWidget : ToneWidget)
{
    let channel = toneWidget.getIndex()

    // Switch off the note this channel was playing before the change
    soundGenerator.playNoteOff(UInt32(currentNotes[channel].frequency), channelNumber: UInt32(channel))

    updateSineWave()

    // Start the new note and remember it for the next change
    let pair = toneWidget.getFrequencyVelocityPair()
    soundGenerator.playNoteOn(UInt32(pair.frequency), velocity: UInt32(pair.velocity), channelNumber: UInt32(channel))
    currentNotes[channel] = pair
}
```
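The ordering in this handler matters: a MIDI note-off is matched by note number on its channel, so sending a note-off for the *new* note number would leave the old note sounding. That is why the stored value in currentNotes is used. The bookkeeping can be isolated and exercised without Core Audio; in this sketch `NoteOutput` is a hypothetical protocol standing in for the project's sound generator, and `ChannelNoteTracker` is likewise an illustrative name:

```swift
/// Hypothetical abstraction over the sound generator's note-on/note-off calls.
protocol NoteOutput {
    func noteOn(_ note: UInt32, velocity: UInt32, channel: UInt32)
    func noteOff(_ note: UInt32, channel: UInt32)
}

/// Remembers the note sounding on each channel so a change can
/// silence the old note before starting the new one.
struct ChannelNoteTracker {
    private(set) var currentNotes: [(note: UInt32, velocity: UInt32)?]

    init(channelCount: Int) {
        currentNotes = Array(repeating: nil, count: channelCount)
    }

    mutating func play(note: UInt32, velocity: UInt32, channel: Int, on output: any NoteOutput) {
        // Stop whatever this channel was playing, using the stored note number
        if let previous = currentNotes[channel] {
            output.noteOff(previous.note, channel: UInt32(channel))
        }
        output.noteOn(note, velocity: velocity, channel: UInt32(channel))
        currentNotes[channel] = (note: note, velocity: velocity)
    }
}
```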

The updateSineWave() method simply loops over the widgets, gets each value and passes those into the sine wave renderer:

```swift
func updateSineWave()
{
    var values = [FrequencyVelocityPair]()

    for widget in toneWidgets
    {
        values.append(widget.getFrequencyVelocityPair())
    }

    sineWaveRenderer.setFrequencyVelocityPairs(values)
}
```

Once again, big thanks to Gene De Lisa, who did all the hard work of researching the Core Audio and Audio Toolbox APIs. All of the source code for this project is available in my GitHub repository.

Published at DZone with permission of Simon Gladman, DZone MVB. See the original article here.


